Object Detection is a powerful AI feature that analyzes images and video feeds to identify and locate objects in real time. It works by detecting specific objects, such as people, vehicles, or other defined items, and highlighting them with bounding boxes and labels.

This feature can be used for a variety of purposes, such as:

- Monitoring and Security: Detect objects of interest in surveillance footage.

- Automation: Automate processes, such as counting items or triggering actions when certain objects are detected.

- Real-Time Insights: Gain immediate data and insights from your live stream to make informed decisions.

With its versatility, Object Detection can transform your live video streams into actionable tools for various industries and use cases.

System requirements

The object detection operator requires additional prerequisites on both Windows and Linux platforms.

Models and Compatibility

Composer allows you to bring your own YOLO models for Object Detection, offering flexibility and customization for your specific use case. By using pre-trained models or training your own, you can tailor the detection process to match your application’s needs.

For more on how to train your own model, please refer to the Ultralytics YOLO documentation.

Requirements for models:

- Supported versions: YOLOv8, YOLOv9, YOLOv10, YOLOv11, YOLOv12

- Models exported to ONNX format using opset version 17.

yolo export model=path/to/[your_model].pt format=onnx opset=17from ultralytics import YOLO model = YOLO("path/to/[your_model].pt") model.export(format="onnx", opset=17)

ℹ️ Licensing for User-Supplied Models

- Commercial use of user-supplied YOLO models trained with Ultralytics tooling requires an Ultralytics Enterprise License.

- Composer does not provide or manage Ultralytics licenses.

For details, see Ultralytics Licensing.

Getting Started - Load a Model

To use Object Detection in Composer, the first step is to load your YOLO-trained ONNX (or CONNX model, such as Composer's BlackJack model) into Composer:

Click the "Load" button to open a file dialog. Browse to the location of your ONNX model file and select it.

Once loaded, the model's information will be displayed. Ex:

- Model Source: Current loaded model

- Model Size: Size in MB.

- Total Classes: Number of classes the model is trained to detect.

- Model License: Indicates whether the model uses YOLO licensing or is Composer-trained.

- Composer License: Confirms your license status for the model.

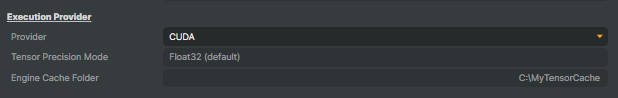

Select Execution Provider

Composer uses your NVIDIA GPU to accelerate object detection. With CUDA, you get reliable performance right out of the box. TensorRT goes a step further by optimizing your ONNX model for extra speed and efficiency, which is especially valuable in real-time streams. TensorRT is NVIDIA’s high-performance engine that optimizes your neural network model for fast and efficient execution on your GPU.

- Provider

Choose which execution provider Composer will use for object detection:- CUDA – Default. Acceleration using your NVIDIA GPU.

- TensorRT – Optimizes your model for higher speed and efficiency.

- Tensor Precision Mode (TensorRT only)

Control the balance between speed and accuracy- Float32 – Default. Provides the highest detection accuracy, ideal when precision is most important.

- Float16 – Faster processing with minimal loss of accuracy, perfect for real-time performance

- Engine Cache Folder - Windows/Linux (TensorRT only)

Specify an absolute folder path with read and write permissions on your current operating system (Windows or Linux) where Composer will save the optimized TensorRT engine file. Make sure the folder exists on the machine that will run the project and that the folder path uses the correct syntax for your operating system.- Windows:

c:\path\to\folder - Linux:

/home/<username>/path/to/folder

- Windows:

Model Input Resize Mode

The Model Input Resize Mode option determines how Composer will resize each video frame before feeding it into the model for object detection processing. You can choose between two resize modes: Proportional and Stretched. Select the best mode based on the dataset image resizing the model was trained on to achieve optimal performance.

- Proportional Mode (default):

In this mode, Composer resizes each frame proportionally to fit within the model's input dimensions. The aspect ratio of the original frame is preserved, and padding may be added to maintain the model's required dimensions. Ex:

- Stretched Mode:

This mode directly resizes the frame to match the model's input dimensions, regardless of the aspect ratio. This can result in distortion if the original aspect ratio differs significantly from the model's input dimensions.

While this can cause distortion if the aspect ratio differs significantly, it may also help retain image details depending on the dataset the model was trained on. Ex:

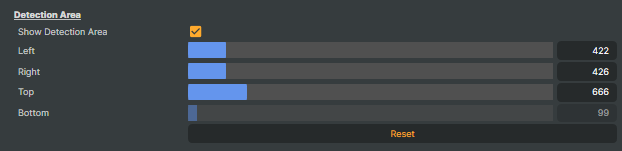

Detection Area

The Detection Area option allows you to define a specific area on the screen where object detection will take place. This can be useful if you only want to detect objects within a particular region of interest rather than processing the entire image.

You have five options to configure the Detection Area:

- Show Detection Area: This option displays a box on the screen, marking the area of interest for visual confirmation. It helps you see exactly where object detection will be focused, allowing for easy adjustments.

- Left: Resize and move the detection area from the left side of the screen.

- Right: Resize and move the detection area from the right side of the screen.

- Top: Resize and move the detection area from the top of the screen.

- Bottom: Resize and move the detection area from the bottom of the screen.

Click on the Reset button to reset if needed.

This feature is particularly useful if you want to focus object detection on a specific part of the video feed, reducing unnecessary processing and improving performance.

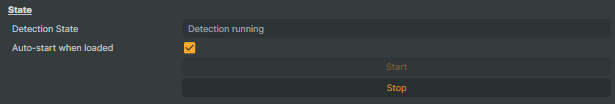

Start and Stop Object Detection

To activate Object Detection, click Start to activate and Stop to deactivate.

If you wish the Operator to start Object Detection automatically, simply check the Autostart checkbox and save your project. Next time you start composer, Object Detection will start automatically.

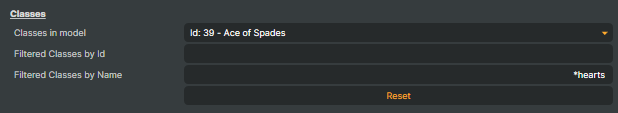

Classes

The Classes section allows you to filter the classes the model detects so you can focus on specific objects of interest. If the model has been trained to detect multiple object classes but you are only interested in a few, these options can help you narrow down the detection results.

There are three options available in the Classes section:

- Classes In Model:

A dropdown that displays all the classes and their associated IDs the model is capable of detecting. This allows you to see exactly what the model was trained to recognize. - Filter Classes By Id:

Enter the class IDs of the objects you want to detect. These IDs should be entered as a comma-separated list. Only the classes corresponding to the specified IDs will be included in the detection results; all other objects will be excluded. - Filter By Class Name:

Similar to "Filter Classes By Id", this option allows you to filter detections by class names. Enter the names of the classes you’re interested in as a comma-separated list. You can also use wildcards (*) before and after class names for more flexible filtering. For example, if you want to detect all cards with the name "hearts" from a deck, you could enter *hearts to filter all classes whose names end with "hearts" or *ace* to filter all objects containing “ace”.

These filtering options help you fine-tune the detection process by focusing on the classes that are most relevant to your use case, reducing the amount of irrelevant data and improving the detection efficiency.

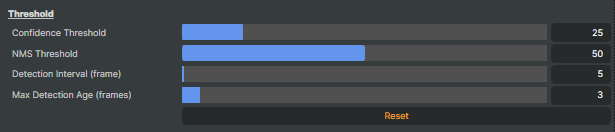

Threshold

The Threshold section allows you to adjust various threshold values that control the behavior and accuracy of the object detection. These settings help fine-tune how the model performs and how often detections are made.

- Confidence Threshold:

This value sets the minimum confidence level required for the model to consider an object as detected. The confidence threshold is specified as a percentage, ranging from 10% to 100%. A higher value means only objects with higher confidence will be considered valid detections. For example, a setting of 70% means only objects with a detection confidence score of 70% or higher will be recognized. - NMS Threshold (Non-Maximum Suppression):

This threshold controls the level of overlap allowed between multiple detections of the same object. Non-maximum suppression (NMS) helps eliminate redundant bounding boxes by keeping only the one with the highest confidence score. The NMS threshold determines how much overlap is permissible before a second bounding box is suppressed. Lower values will result in fewer detections being retained. - Detection Interval:

This setting controls how frequently object detection occurs. A value of 0 means detection is performed on every frame. If you set the detection interval to 5, detection will only occur on every 5th frame. This is useful for reducing computational load if you don’t need to perform detection on every frame of the video. - Max Detection Age:

This setting determines how many frames a previous detection will be displayed in case of frame loss. For example, if the model loses track of a detected object, the detection will persist for the number of frames specified in this setting. This helps maintain detection continuity in case of brief occlusions.

These threshold settings allow you to adjust detection sensitivity, performance, and how long detected objects are displayed, providing greater control over the detection process.

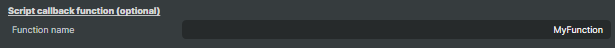

Script Callback Function

The Script Callback Function is an optional setting that allows you to define the name of a custom function to be triggered when objects are detected. This feature is designed for advanced users who want to automate actions with Composer’s powerful Script Engine.

By specifying a function name, Composer will call the defined function whenever an object detection event occurs. This enables you to:

- Trigger custom actions when specific objects are detected.

- Update scenes, layers, or other elements within the application.

- Integrate with external systems or APIs based on object detection results.

For example, you could write a script to change a scene whenever a certain object is detected and update on-screen texts, counters, or log detection events for further analysis.

This feature provides flexibility for automating workflows and enhancing the functionality of Composer according to your specific needs. Read more about Script Engine here.

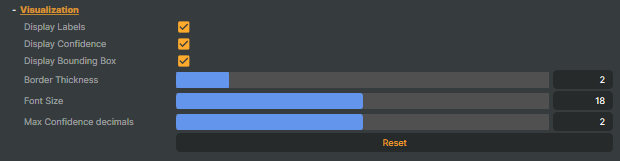

Visualization

The Visualization section provides options for customizing the visual display of detected objects on the screen. These settings allow you to modify how detection results are shown, making it easier to interpret and analyze the objects detected by the model.

The following options are available:

- Display Labels:

This option enables the display of labels for detected objects. When enabled, the label (such as "person", "car", etc.) will be shown next to the object’s bounding box for easy identification. - Display Confidence:

This option displays the confidence value of each detected object. The confidence value indicates how certain the model is about the detection, expressed as a percentage (e.g., 85%). Enabling this option will show the confidence score next to each detected object’s label. - Display Bounding Box:

This option draws a bounding box around each detected object. The box will highlight the area where the object was detected in the frame, making it visually clear where the detection occurred. - Border Thickness:

This setting controls the thickness of the bounding box borders. By adjusting this value, you can make the bounding boxes more visible or less intrusive, depending on your preference. - Font Size:

This setting defines the font size used when displaying labels and confidence values. You can adjust the font size to ensure the text is easily readable or fits better within the visual space of the frame. - Max Confidence decimals:

Specifies how many decimal places (0–4) are shown for confidence scores in the on-screen display. Values are rounded to the nearest decimal based on the setting. This only affects visualization, not the underlying detection results.

These options provide full control over the visual presentation of detection results, allowing you to tailor the display to your needs.

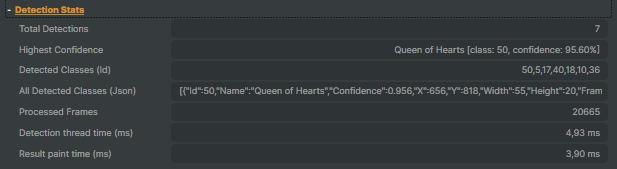

Detection Stats

The Detection Stats show what the model is currently detecting.

- Total Detections:

Displays the total number of objects found in the current frame. - Highest Confidence:

Displays the object with the highest Confidence score in the current frame. - Detected Classes (ID):

A comma-separated string with IDs of all detected objects. - All Detected Classes (JSON):

A JSON string with all objects, IDs, labels, bounding boxes, confidence scores, etc, that can be used for further processing with Composer's Script Engine. - Processed Frames:

Total frames processed by the object detection model. - Detection thread time (ms):

Displays the time in milliseconds taken by the detection thread to perform inference on the current frame. - Result paint time (ms):

Displays the time in milliseconds required to render (draw) the detection results on screen. The value depends on which visualization options (labels, bounding boxes, confidence) are enabled.

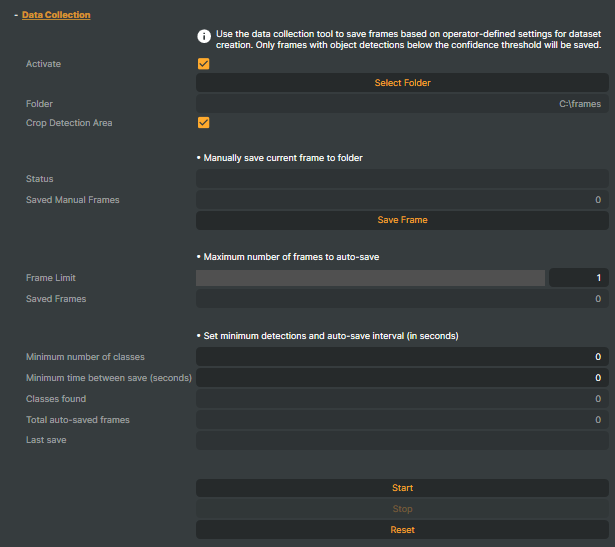

Data Collection

The Data Collection feature allows you to save video frames that contain detected objects for later use. This helps you analyze your object detection results more closely or build and improve your object detection datasets. You can collect frames either manually or automatically based on your preferences.

Example of use cases:

- Manually capture specific frames you want to review or annotate later.

- Automatically save frames when detected objects have confidence levels below a set threshold — helpful for spotting challenging cases where the model needs improvement.

- Build custom datasets by collecting real-world examples to retrain or fine-tune your models.

Select folder

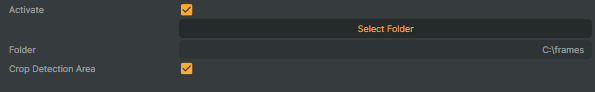

- Activate

This checkbox enables or disables the Data Collection feature. - Select Folder

Click this button to choose the folder where all collected frames will be saved. - Crop Detection Area

Crops each saved frame to the defined Detection Area.

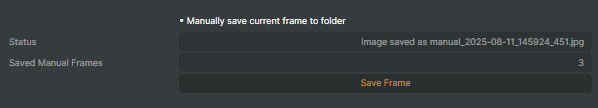

Manual Save

- Status

This area shows a message indicating whether the current frame was successfully saved or not, helping you monitor the Data Collection process in real time. - Saved Manual Frames

Displays the total number of frames you have saved manually so far. This helps you keep track of your manual data collection progress. - Save Frame

Click this button to manually save the current video frame immediately. Use this to capture important frames on demand for later analysis or annotation.

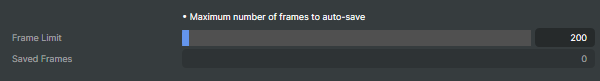

Automatic Save

- Frame Limit

Set the maximum number of frames the operator will save automatically. You can choose any value between 0 and 100,000. Setting this limit helps control storage use by restricting how many frames are collected automatically. - Saved Frames

Shows the total number of frames that have been saved automatically so far. This helps you keep track of the automatic data collection progress.

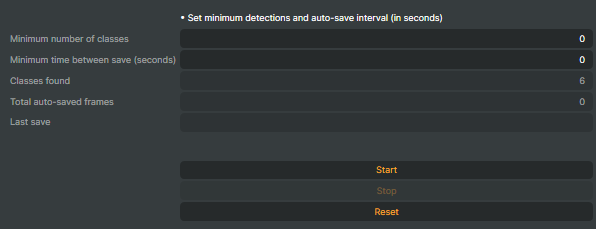

Save Conditions

- Minimum Number of Classes

Set the minimum number of detected objects required for a frame to be saved automatically. Choose any value from 0 to 1000. This ensures only frames with enough relevant detections are collected, helping you filter out less useful data. - Minimum Time Between Saves (seconds)

Specify the minimum number of seconds that must pass after the last saved frame before a new frame can be saved. This helps prevent saving too many frames in quick succession, managing storage and processing resources efficiently. - Classes Found

Displays the number of different object classes detected in the frame that was just saved. This gives you insight into what the model identified at the moment of capture. - Total Auto-Saved Frames

Shows the total count of all frames saved automatically so far. This helps you track your automatic data collection progress over time. - Last Save

Displays the exact time when the last frame was saved. This helps you monitor recent activity and understand the frequency of automatic saves.